Cet article est également disponible en français.

Cet article est également disponible en français.

The context

Many of you are certainly aware of the fact that somebody succeeded in stealing LinkedIn a 6.5 million password SHA-1 file lately. In practice, those were not clear passwords but hashed ones. However, the algorithm had been used in an unsecure way, namely without salt.

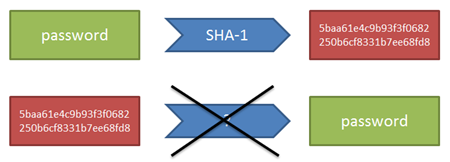

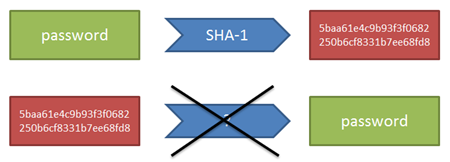

To give a very quick explanation to beginners, the principle of a hash is to transform a word into another hardly readable, without there being a mathematical way to inverse the transformation. The hash for “password”, for exemple, is “5baa61e4c9b93f3f0682250b6cf8331b7ee68fd8”, and there does not exist a theoretical way to retrieve “password” from there.

This capability is interesting for storing passwords, because it guarantees that, if the hash is the only thing stored, it is still possible to evaluate a future password by comparing its hash with the reference hash. And yet, even the database administrator cannot view the clear password.

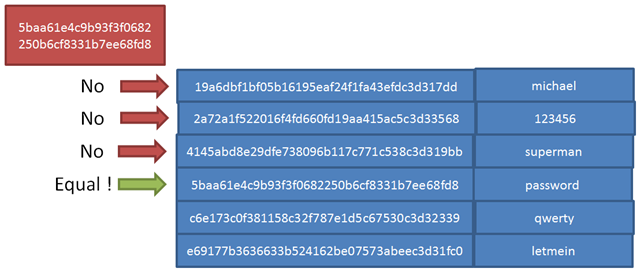

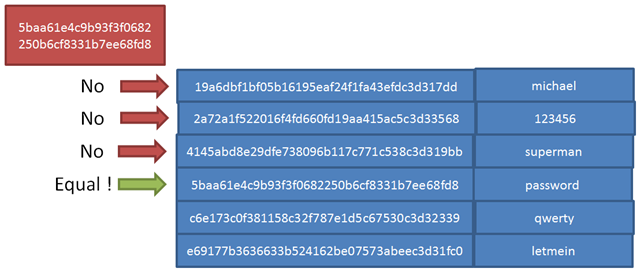

This is for theory. In practice, well… there is a flow in the deterministic way of a hash algorithm. Since “password” will always result in a hash of “5baa61e4c9b93f3f0682250b6cf8331b7ee68fd8” (and this is quite necessary, otherwise we could not use it for password hashes comparison and thus authentication), that means it is possible to pre-compute hash dictionaries based on current passwords, and then directly compare hash values, in order to try and deduce the corresponding password.

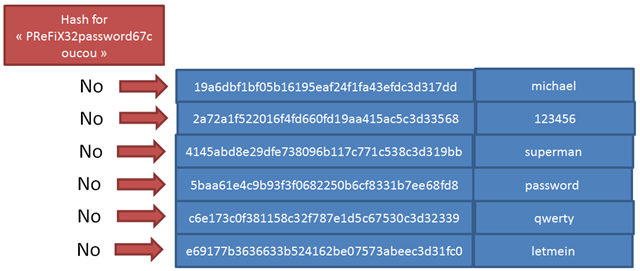

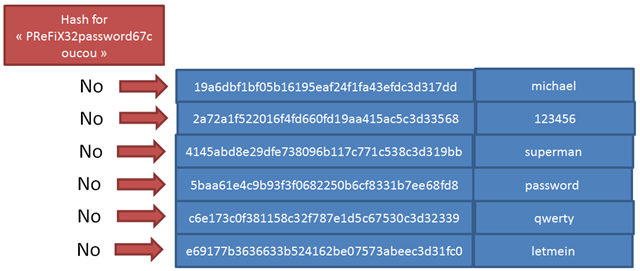

The countermeasure there is to use what is called a salt. Salting the hash algorithm consists in adding well-known “noise” around the password. This way, instead of hashing “password”, we would for example compute the hash for “PReFiX32password67coucou”, for which there are far smaller chances of finding the value in a hash dictionary. As far as password validation, no problem, since the authentication module knows the salt to be added before computing the hash.

The mistake from LinkedIn was to not add any salt to their use of SHA-1 hash method (or at least at some point in time, when this file was extracted). So, when the file in question has leaked, anybody was able to find known passwords there, like “password”, “123456”, etc.

The hypothesis

Obviously, this is a mistake from LinkedIn. It is all nice and well to explain that their team hosts some security experts (http://blog.linkedin.com/2012/06/09/an-update-on-taking-steps-to-protect-our-members/), but if they forget to use a salt – which any decent security-oriented programmer knows about – no use to make too much fuss around that…

Yet, I am making the hypothesis that a salt, applied uniquely over the whole file, would in fact not have changed much to the situation.

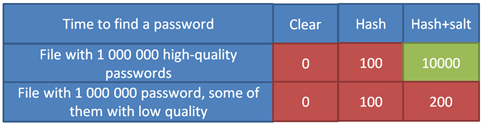

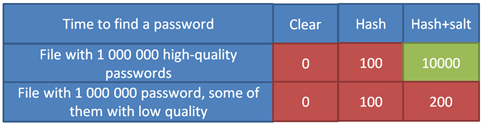

Let me explain myself. In such a huge file with 6.5 millions passwords, there are all chances to find a large number of “common” passwords, like “password”, “123456”, etc. Which means that, if the hash method is unique, a brute force attack on the salt, while restraining to only these targets passwords, could be enough to find its value. This of course will take some time, but I make the guess that it would only multiply attack time by two instead of the number of possible strings. In short, using relative time units, we would have :

I have written this long article to relate my path through different experimentations along this hypothesis. The code is in C#, but will be easily understandable, whatever your language background.

Finding the files

Before being able to toy with them, I had to find the file in question. The original link does not work anymore :

But if you follow the conversations on https://news.ycombinator.com/item?id=4073309, you will undoubtedly find a copy without any sweat… I imagine there are now thousands in the world. I do not see the use of removing the initial link, as it is only going to encourage disk space waste for duplicates. And this is pointless, as the file is now everywhere.

Anyway, this is not the question, and we find another site where to get the file :

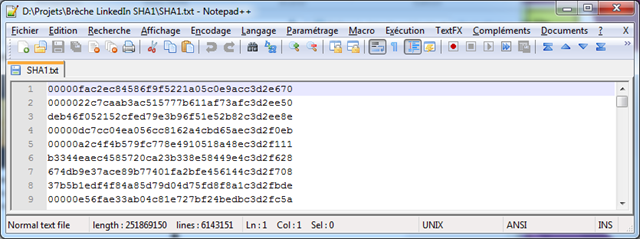

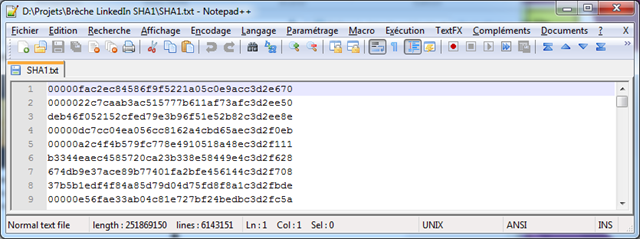

A 20 minutes download later, we can take a peek in the file, and this seems to be the correct one, with some of the hashes beginning with five “zero” caracters, as seems to be an abundantly talked about caracteristics on http://dropsafe.crypticide.com/article/7235.

After that, we download a second file wich contains a dictionary of common passwords, found on DazzlePod, and we start writing a quick test in order to try and retrieve the most common values :

[TestMethod]

public void PresenceOfSimplePasswordsInReferenceFile()

{

List<string> words = new List<string>() { "password", "123456", "superman" };

string line;

int nbWordsFound = 0;

int nbAnalyzedLines = 0;

using (StreamReader reader = new StreamReader("passwords.txt"))

{

while ((line= reader.ReadLine()) != null)

{

nbAnalyzedLines++;

if (words.Contains(line))

nbWordsFound++;

if (nbWordsFound == words.Count)

break;

}

}

Assert.AreEqual(words.Count, nbWordsFound);

}

This file contains a little more than two millions passwords. I do not know how they have been gathered, but they correspond to possible passwords, and are not some random-generated characters sets. Anyway, we found the common passwords as expected. We are now going to use this file to lead a targeted attack on the hash values in the LinkedIn file.

Searching SHA-1 hash values, using brute force

The next step consists in searching SHA-1 hash of these passwords in the LinkedIn file :

[TestMethod]

public void PresenceSHA1OfPasswords()

{

List<string> words = new List<string>() { "password", "123456", "superman" };

SHA1 engine = SHA1CryptoServiceProvider.Create();

List<string> hashes = words.ConvertAll(m => BitConverter.ToString(

engine.ComputeHash(Encoding.UTF8.GetBytes(m))).ToLower().Replace("-", string.Empty));

string line;

int nbHashFound = 0;

int nbAnalyzedLines = 0;

using (StreamReader reader = new StreamReader("SHA1.txt"))

{

while ((line = reader.ReadLine()) != null)

{

nbAnalyzedLines++;

if (hashes.Contains(line))

nbHashFound++;

if (nbHashFound == words.Count)

break;

}

}

Assert.AreEqual(hashes.Count, nbHashFound); // Fails

}

We have not found them directly, but this is where we have to take into account the “00000” in prefix. If we replace the prefix, no problem, we found everything we looked for (I only show modified code from listing above) :

List<string> hashes = words.ConvertAll(m => string.Concat("00000", BitConverter.ToString(

engine.ComputeHash(Encoding.UTF8.GetBytes(m))).ToLower().Replace("-", string.Empty).Substring(5)));

OK. Now, we can step up to more serious things, by crossing the entire content of the files, in order to gather all “simple” passwords in the “LeakedIn” file. To do so, we obviously are going to use brute force. And I am really talking about “brute” : I am simply going to stupidly loop over the values, without any optimization. In practice, a malicious person would use more sophisticated tools like John The Ripper (particularly since a dedicated extension for LinkedIn hash manipulation, taking into account the 00000 prefix, has been released).

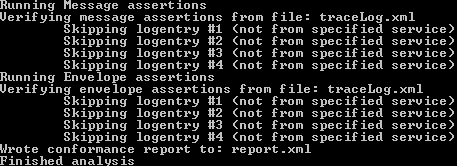

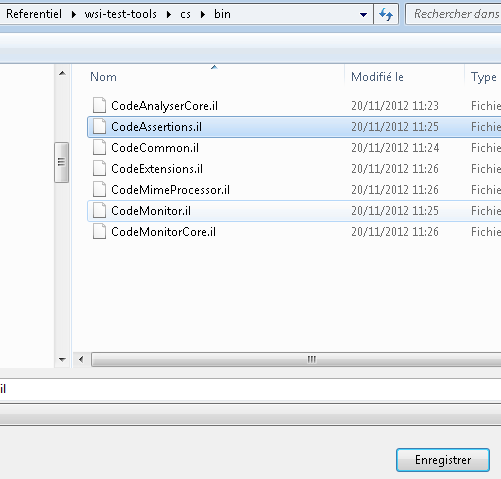

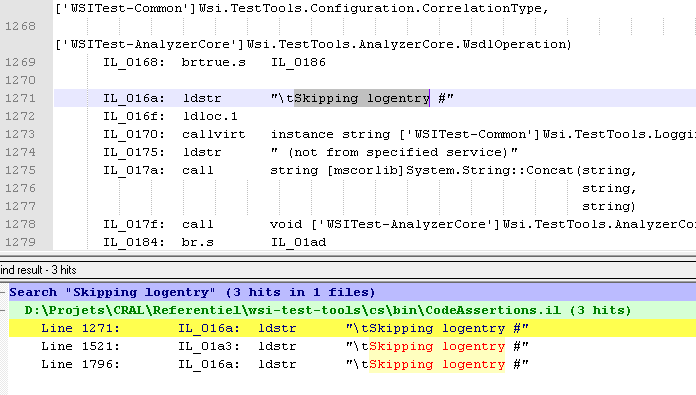

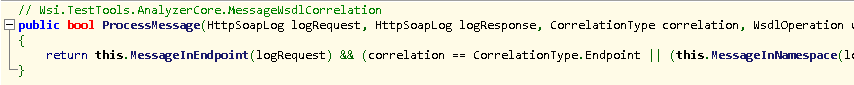

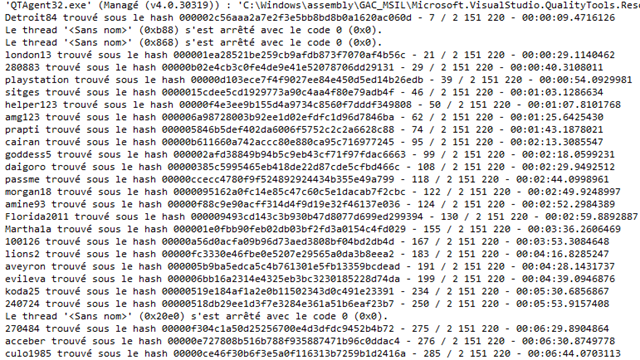

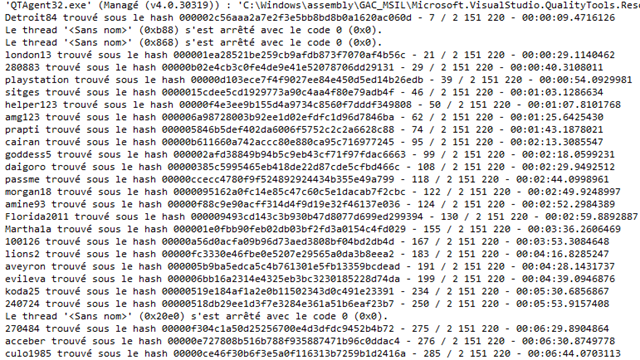

In just the time necessary to type the previous paragraph, this sub-optimal code (although using excellent hardware – Dual Xeon, lots of RAM, SSD) has found already 20 or so passwords (sorry about the screen captures in French – as you most certainly have notived, English is not my native language) :

Normally, letting it run during a night would bring enough passwords to have a statistically large set for my experiments. The code is consequently modified to throw out the results in a CSV file :

[TestClass]

public class TestHashes

{

private SHA1 engine = SHA1CryptoServiceProvider.Create();

private string CalculateReducedHash(string password)

{

return string.Concat("00000", BitConverter.ToString(

engine.ComputeHash(Encoding.UTF8.GetBytes(password))).ToLower()

.Replace("-", string.Empty).Substring(5));

}

[TestMethod]

public void RechercheMotsDePasseUsuelsDansFichierFuiteLinkedInVersionToutEnMemoire()

{

string output = DateTime.Now.ToString("yyyy-MM-dd-hh-mm-ss");

string[] table = File.ReadAllLines("SHA1.txt");

List<string> hashes = new List<string>(table);

string line;

int index = 0;

Stopwatch chrono = Stopwatch.StartNew();

using (StreamReader reader = new StreamReader("passwords.txt"))

{

while ((line = reader.ReadLine()) != null)

{

index++;

string hash = CalculateReducedHash(line);

if (hashes.Contains(hash))

{

Debug.WriteLine(string.Format("{0} trouvé sous le hash {1} - {2} / 2 151 220 - {3}",

line, hash, index, chrono.Elapsed));

File.AppendAllText(output, string.Concat(line, Environment.NewLine));

}

}

}

}

}

Then, we only have to wait. A little less than 24 hours later, the results are as follows :

42 799 hash values have been found in the LinkedIn file, out of 412 116 paswword tested among the 2 151 220 contained in the downloaded password dictionary.

Putting it in second gear

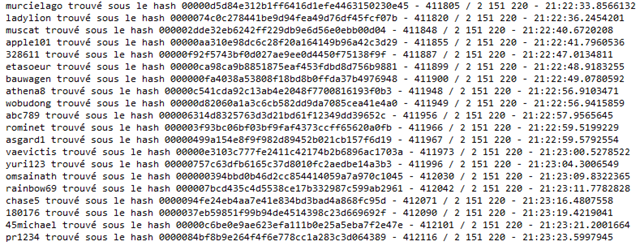

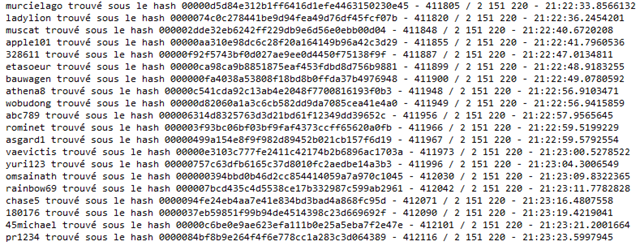

This was quite some fun, but I am not going to leave my computer on for three days just to gather a set of passwords in order to experiment a hypothesis on the presence of salt in hash algorithms ! 40 000 passwords is already quite nice, but I would like to work on the largest size of passwords set, in order to valider as well as possible my idea. The code is then modified in order to use the full eight cores of my CPU.

We are going to use something like this :

[TestClass]

public class TestSHA1Breach

{

private List<string> hashes;

private string identifier;

private string outputDir;

[TestMethod]

public void TestParallelBreachSHA1LinkedIn()

{

identifier = DateTime.Now.ToString("yyyy-MM-dd-hh-mm-ss");

outputDir = string.Concat("ParallelResults-", identifiant);

Directory.CreateDirectory(outputDir);

string[] table = File.ReadAllLines("SHA1.txt");

hashes = new List<string>(table);

using (StreamReader reader = new StreamReader("passwords.txt"))

{

List<Task> tasks = new List<Task>();

int limit = int.MaxValue;

string line;

List<string> words = new List<string>();

while ((line = reader.ReadLine()) != null && limit-- >= 0)

{

words.Add(line);

if (words.Count == 100)

{

Task t = new Task(new Action<object>(Treatment), new List<string>(words));

tasks.Add(t);

t.Start();

words.Clear();

}

}

foreach (Task t in tasks)

t.Wait();

string completeFile = string.Concat("parallel-", identifier);

foreach (string file in Directory.GetFiles(outputDir))

File.AppendAllLines(completeFile, File.ReadAllLines(file));

Directory.Delete(outputDir, true);

}

}

// We could also use BlockingCollection, but the advantage of files is that when the test fails

// in the middle of execution, because of a lock or any other reason, we do not lose every result.

//private BlockingCollection<string> Results = new BlockingCollection<string>();

private void Treatment(object words)

{

SHA1 engine = SHA1CryptoServiceProvider.Create();

List<string> Results = new List<string>();

foreach (string word in words as List<string>)

{

string hash = string.Concat("00000", BitConverter.ToString(

engine.ComputeHash(Encoding.UTF8.GetBytes(word))).ToLower()

.Replace("-", string.Empty).Substring(5));

if (hashes.Contains(hash))

{

Debug.WriteLine(string.Format("{0} trouvé sous le hash {1}", word, hash));

Results.Add(word);

}

}

string outputFile = Path.Combine(outputDir, Guid.NewGuid().ToString());

File.AppendAllLines(outputFile, Results.ToArray());

}

}

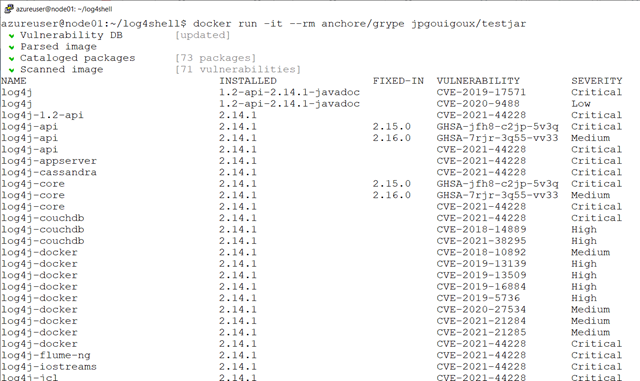

I am not going to get into details, but this code is roughly eight times quicked than the previous one, as it uses all eight cores of my machine. We could of course go even further by using the GPU, but this is quite some work, and since the estimations now are around 15 hours for the whole computation, no use to make this effort. Again, had the goal been to simply crack as many passwords as quick as possible, we would use John The Ripper, which by the way now has a plugin to use OpenCL. With the possibilities of Cloud Computing, I am convinced there are tools somewhere that push the performance even further by massively distribution of the computing.

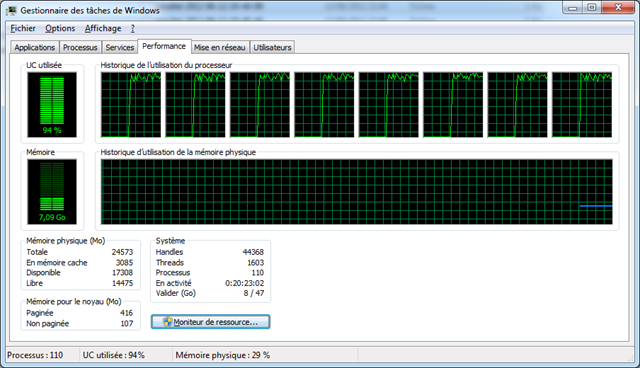

Finally, we obtain the file with all the passwords for which the hash has been found in the LinkedIn file. Out of 6.5 millions hash, this method brought us circa 600 000 passwords, which is quite good, since we can consider that very common ones are highly duplicated. The whole operation lasted 18 hours, to be compared to the 15 hours estimated…

Now to the serious things

The next step brings us closer to the hypothesis to be tested. Until now, we have only gathered data to test it. Now, we are going to use the passwords we found as a set for following tests. To do so, we create a file with the hash values of these passwords, but this time with a salt, and we are going to see if the statistical knowledge of these common passwords indeed helps us realizing a brute force attack not on the passwords themselves, but on the salt used for their hashing.

To operate the test, I use a salt that serves as an example with my students : I only prefix the value buy “zorglub”. I thus recalculate the file with this salt, and we are going to test the proposed method.

For each salt attempted value, we could test only the 25 most common words (see http://splashdata.com/splashid/worst-passwords/index.htm) :

- password

- 123456

- 12345678

- qwerty

- abc123

- monkey

- 1234567

- letmein

- trustno1

- dragon

- baseball

- 111111

- iloveyou

- master

- sunshine

- ashley

- bailey

- passw0rd

- shadow

- 123123

- 654321

- superman

- qazwsx

- michael

- football

The idea is to loop over attempted values of the salt, calculate hash values for these 25 words, and to try and find at least one of the value in the target hash file. If we find one, there is a good chance that we have found the salt, and we can then go back to the traditional brute force attach over passwords that we have demonstrated above as working, even on large volumes.

The statistical frequency of the first words in the list is such that we could use only a few of them. For my tests, I am even going to start with only the most common one (“password”). In fact (and this is the core of the problem in using a unique salt on a set of several millions passwords), the set is so big that we are almost assured of hitting one value corresponding to this common password. The code is the following :

[TestClass]

public class TestSaltAttack

{

private List<string> hashes;

[TestMethod]

public void TestParallelSaltSearch()

{

string salt = "zorglub";

int limit = int.MaxValue;

// Preparing the file with the hash under the chosen salt

string filePasswordBruteForceObtainedFromLinkedIn = "FichierObtenuSansParallelisme.txt";

string fileTargetHash = "FichierHashesSelEnCours.txt";

SHA1 engine = SHA1CryptoServiceProvider.Create();

using (StreamReader reader = new StreamReader(filePasswordBruteForceObtainedFromLinkedIn))

using (StreamWriter scribe = new StreamWriter(fileTargetHash))

{

string line;

while ((line = reader.ReadLine()) != null)

{

scribe.WriteLine(BitConverter.ToString(engine.ComputeHash(

Encoding.UTF8.GetBytes(string.Concat(salt, line)))));

}

}

// Storing all the corresponding values in memory to speed up the process

hashes = new List<string>(File.ReadAllLines(fileTargetHash));

List<Task> tasks = new List<Task>();

using (StreamReader reader = new StreamReader("passwords.txt"))

{

string line;

List<string> hypotheses = new List<string>();

while ((line = reader.ReadLine()) != null && limit-- >= 0)

{

hypotheses.Add(line);

if (hypotheses.Count == 100)

{

Task t = new Task(new Action<object>(Treatment), new List<string>(hypotheses));

tasks.Add(t);

t.Start();

hypotheses.Clear();

}

}

}

foreach (Task t in tasks)

t.Wait();

}

private void Treatment(object hypotheses)

{

// Treating every hypothesis consists in checking that, by applying this salt on "password",

// we obtain a hash contained in the target file. If this is so, there are statistically good chances

// that we have found the has salt.

SHA1 engine = SHA1CryptoServiceProvider.Create();

List<string> Results = new List<string>();

foreach (string hypothesis in hypotheses as List<string>)

{

string hash = BitConverter.ToString(engine.ComputeHash(

Encoding.UTF8.GetBytes(string.Concat(hypothesis, "password"))));

if (hashes.Contains(hash))

{

Debug.WriteLine(string.Format("Il y a de bonnes chances pour que {0} soit le sel", hypothesis));

File.AppendAllText(string.Concat(

"ResultatRechercheSel-",

DateTime.Now.ToString("yyyy-MM-dd-hh-mm-ss")), hypothesis);

Environment.Exit(0);

}

}

}

}

The very first wave of attempted values for the salt (hypotheses), for a brute force attack, is of course using the same password dictionary, and use them as prefix. Then, we would try them as suffix, then as both, then we would integrate additional symbols, etc.

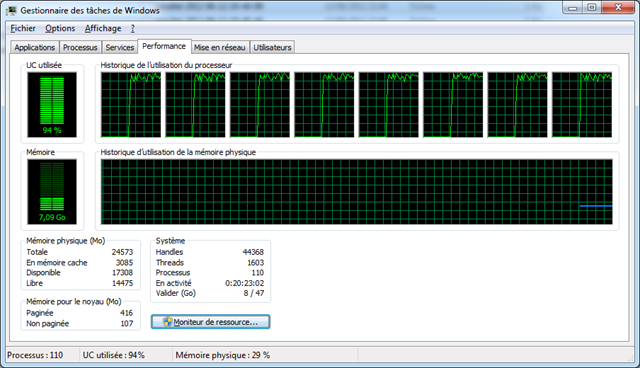

Results

This first approach gets the salt in only a few minutes. It just happens that the salt I often use as an example with my students is the 1 698 685th entry in the password dictionary.

First brute force attack on a simple salt over a 6.5 millions hash values file : solution found in 4 minutes !

We could of course make the salt more complex, for example with “Zorglub64” as a prefix, and “Batman28” as a suffix, but there would be something quite artificial in adapting the algorithm to what we are looking for.

Moreover, what we wished to demonstrate has already been so through this example : right from the time where we know there were good chances of knowing one of the password and if we have a large enough set, using a unique salt for all the values is almost useless. Instead of multiplicating the search cases, the salt only adds a step in the attack. In statistical extremes, we could at best multiply the attacking time by two, which is of course far from enough.

Summary

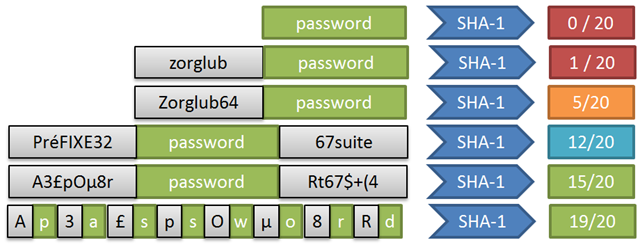

A small table to sum up the situation :

In short, if you expose a large number of hash values and some passwords are bad quality ones (for example among the 25 most common ones), even if their proportion is very low, a simple salt will simply not add any security.

The whole idea behind is that the sheer volume of data, instead of slowing down the attack, on the contrary gives more chances to quickly validate an hypothesis on the value of the salt. The conclusion is that a salt should not be unique for large volumes, otherwise it is losing some of its efficiency.

A solution

The explanation of the problem was quite long, but the solution to it is quite simple : one should not use a single salt for large volumes of passwords. We could for example imagine a variable salt (for example based on the hash of the creation date of a user), or even an exogen variable, like a unique account identifier (but definitely not the login itself : the only thing that prevented the LeakedIn file to being an absolute catastrophy was that the login values were not in the same file).

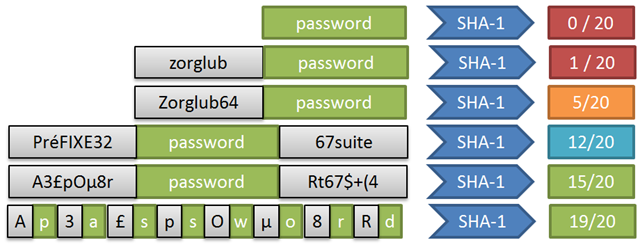

Here is a proposal of ranking different modes for using a salt over a reference of twenty (the password target for the hash is in green, and the salt in grey) :

There is no 20/20 grade, as no method is 100% secure. Even SHA-1 in itself is not free from potential risks : collisions-generating algorithms have been found that are quick enough to represent an actual problem (http://people.csail.mit.edu/yiqun/SHA1AttackProceedingVersion.pdf, or for something a little bit easier to read, http://www.rsa.com/rsalabs/node.asp?id=2927). If you use SHA-1 for providing digital signature, you may have an interest in using a more modern algorithm, like SHA-256.

Beware of the trap of false security given by “exotic” methods, such as inverting letters of the password, etc. Whatever you imagine, these methods are quite predictible for someone with a bit of imagination, and malicious users definitely have a lot of it. Once such a method is included in an automatic attack tool, you are back to zero security…

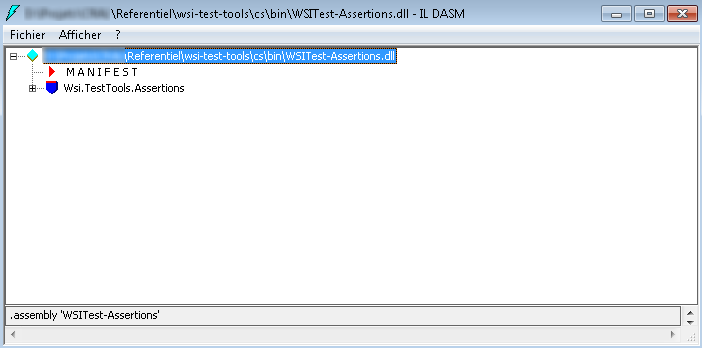

![image_thumb[3] image_thumb[3]](http://gouigoux.com/blog/wp-content/uploads/2013/06/image_thumb3_thumb.png)